Embedding - Traditional Methods

Machine learning algorithms cannot work with raw text directly, so the text must first be converted into numerical vectors. This process is commonly known as feature extraction from text data.

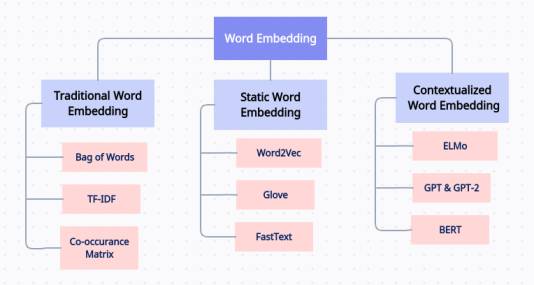

Some widely used methods for feature extraction of text data are listed below:

Here, we discuss only the traditional approaches to word embedding. The remaining two will be covered in separate posts.

Bag of words (BoW)

The Bag of Words model is the simplest and most popular form of word embedding. The key idea of BoW is to encode every word in the vocabulary as a one-hot vector and to represent each document by the sum of the vectors of its words, i.e., by its word counts.

Let r1, r2 and r3 be three records with corresponding vectors v1, v2 and v3. If r1 and r2 are more similar to each other than either is to r3, then we expect the distance between v1 and v2 to be smaller than the distance between v1 and v3 or between v2 and v3.

distance (v1, v2) < distance (v1, v3)

similarity (r1, r2) > similarity (r1, r3)

To make this concrete, let us consider a simple example. Suppose there are three reviews for a product on an e-commerce site:

r1: This product is good and is affordable.

r2: This product is not good and affordable.

r3: This product is good and cheap.

Let’s see how BoW encodes the text data into a machine-readable form. Follow the steps below:

I. Construct a set of all the unique words present in the corpus:

{ this, product, is, good, and, affordable, not, cheap }

There are 8 unique words in this set, so the vector generated for each review will also have size 8, with index positions starting at 0 and ending at 7, i.e.

{ 0: this, 1: product, 2: is, 3: good, 4: and, 5: affordable, 6: not, 7: cheap }
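Step I can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `tokenize` helper and its regular expression are hypothetical choices (lowercasing and keeping alphabetic runs), and indices are assigned in order of first appearance so that they match the mapping above.

```python
import re

reviews = [
    "This product is good and is affordable.",   # r1
    "This product is not good and affordable.",  # r2
    "This product is good and cheap.",           # r3
]

def tokenize(text):
    """Lowercase the text and keep alphabetic runs (an assumed, simplistic tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())

# Give each new word the next free index, in order of first appearance.
vocabulary = {}
for review in reviews:
    for word in tokenize(review):
        vocabulary.setdefault(word, len(vocabulary))

index_to_word = {index: word for word, index in vocabulary.items()}
print(index_to_word)
```

A real pipeline would likely use a more careful tokenizer, but for these three reviews the simple one is enough.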

II. Construct a d-dimensional vector for each review separately:

Here d is the vocabulary size. Each index (dimension) of the vector corresponds to a unique word in the vocabulary, and the value in each cell is the number of times the word with that index occurs in that review.

| d | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| v1 | 1 | 1 | 2 | 1 | 1 | 1 | 0 | 0 |
| v2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 |
| v3 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 1 |

Table: 8-dimensional vector representation of each review
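Step II can be sketched as follows. The `bow_vector` helper is a hypothetical name, and the tokenization (lowercase, alphabetic runs only) is an assumption made for this example.

```python
import re
from collections import Counter

# Vocabulary in the same index order as the mapping in step I.
vocabulary = ["this", "product", "is", "good", "and", "affordable", "not", "cheap"]

reviews = [
    "This product is good and is affordable.",   # r1
    "This product is not good and affordable.",  # r2
    "This product is good and cheap.",           # r3
]

def bow_vector(text, vocabulary):
    """Count how often each vocabulary word occurs in a single review."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    # Counter returns 0 for words that never occur in this review.
    return [counts[word] for word in vocabulary]

v1, v2, v3 = (bow_vector(review, vocabulary) for review in reviews)
print(v1)
print(v2)
print(v3)
```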

Objective

Similar texts (reviews, in this case) should produce vectors that are close to each other.

distance(v1-v2) = √((1-1)²+(1-1)²+(2-1)²+(1-1)²+(1-1)²+(1-1)²+(0-1)²+(0-0)²) = √2

distance(v1-v3) = √((1-1)²+(1-1)²+(2-1)²+(1-1)²+(1-1)²+(1-0)²+(0-0)²+(0-1)²) = √3

The Euclidean distance between vectors v1 and v2 is less than that between v1 and v3. However, the meaning of review r1 is the opposite of review r2, while r3 says nearly the same thing as r1. Thus, BoW does not preserve the semantic meaning of words, and it fails when a small change in the text (such as adding "not") changes the meaning.
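The two distances can be checked numerically with a short sketch. The `euclidean` helper is a hypothetical name, and the vectors are copied from the table above.

```python
import math

v1 = [1, 1, 2, 1, 1, 1, 0, 0]
v2 = [1, 1, 1, 1, 1, 1, 1, 0]
v3 = [1, 1, 1, 1, 1, 0, 0, 1]

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

d12 = euclidean(v1, v2)  # sqrt(2)
d13 = euclidean(v1, v3)  # sqrt(3)
print(d12, d13, d12 < d13)
```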

There is another variant of BoW, known as binary BoW, which uses the Boolean values 0 and 1 instead of the number of occurrences of the word in the document. If the word occurs at least once, the corresponding cell is assigned 1, otherwise 0.

from sklearn.feature_extraction.text import CountVectorizer

Limitations

- The vector length becomes very large for a large corpus, since it equals the vocabulary size.

- BoW produces a sparse matrix (mostly zeros), which is wasteful and something we would like to avoid.

- It retains no information about grammar or the ordering of words in a document.

TF-IDF

In NLP, an independent text entity is known as a document, and the collection of all documents in the project is known as the corpus. tf-idf stands for Term Frequency-Inverse Document Frequency. The technique is easiest to understand by studying tf and idf separately.

Term frequency (tf) measures how often term t appears in a document d. In other words, it is the probability of finding term t in document d.

Inverse document frequency (idf) is the (log-scaled) inverse of the probability of finding a document that contains term t in the corpus. In other words, it measures how informative term t is.

We can now compute the tf-idf score for each word in each document of the corpus. The score is a weight for a term within a document; the similarity between two documents can then be computed from their tf-idf vectors. Words with a higher score are more important. The tf-idf score is high when both the tf and idf values are high. So, tf-idf gives more importance to words that are:

- Frequent in the document (high tf)

- Rare in the corpus (high idf)

Now this tf-idf score is used as a value for each cell of the document-term matrix, just like the frequency of words in case of Bag-of-Words. The formula below is used to compute tf-idf score for each cell:
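A common way to write the definitions is given here. The symbols n, N and df are notation introduced for this sketch, and the base-10 logarithm is inferred from the idf column of the table in this section (log10(3/2) ≈ 0.176 and log10(3/1) ≈ 0.477); other sources use the natural logarithm or smoothed variants.

```latex
\mathrm{tf}(t, d) = \frac{n_{t,d}}{\sum_{t'} n_{t',d}}, \qquad
\mathrm{idf}(t) = \log_{10}\frac{N}{\mathrm{df}(t)}, \qquad
\text{tf-idf}(t, d) = \mathrm{tf}(t, d) \times \mathrm{idf}(t)
```

Here n_{t,d} is the number of times term t occurs in document d, N is the number of documents in the corpus, and df(t) is the number of documents that contain t. For example, "not" occurs once among the 7 words of r2 and in 1 of the 3 reviews, so its score in r2 is (1/7) × log10(3/1) ≈ 0.068.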

While computing tf, all terms are considered equally important. However, certain terms such as is, of, and, that, the, etc. may appear many times but carry little or no importance. Thus we need to down-weight such frequent terms and scale up the rare ones using idf.

| Term | tf (r1) | tf (r2) | tf (r3) | idf | tf-idf (r1) | tf-idf (r2) | tf-idf (r3) |
|---|---|---|---|---|---|---|---|
| this | 1/7 | 1/7 | 1/6 | 0.000 | 0.000 | 0.000 | 0.000 |
| product | 1/7 | 1/7 | 1/6 | 0.000 | 0.000 | 0.000 | 0.000 |
| is | 2/7 | 1/7 | 1/6 | 0.000 | 0.000 | 0.000 | 0.000 |
| good | 1/7 | 1/7 | 1/6 | 0.000 | 0.000 | 0.000 | 0.000 |
| and | 1/7 | 1/7 | 1/6 | 0.000 | 0.000 | 0.000 | 0.000 |
| affordable | 1/7 | 1/7 | 0 | 0.176 | 0.025 | 0.025 | 0.000 |
| not | 0 | 1/7 | 0 | 0.477 | 0.000 | 0.068 | 0.000 |
| cheap | 0 | 0 | 1/6 | 0.477 | 0.000 | 0.000 | 0.080 |
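The scores can be recomputed with a short pure-Python sketch. It assumes tf is the term count divided by the number of words in the review and idf is the base-10 log of (number of reviews / number of reviews containing the term); the helper names are hypothetical. Note that r3 has six words while r1 and r2 have seven, so the tf denominator for r3 is 6.

```python
import math
import re

reviews = {
    "r1": "This product is good and is affordable.",
    "r2": "This product is not good and affordable.",
    "r3": "This product is good and cheap.",
}

# Assumed tokenizer: lowercase, alphabetic runs only.
tokens = {name: re.findall(r"[a-z]+", text.lower()) for name, text in reviews.items()}
n_docs = len(tokens)

def tf(term, name):
    """Share of the words in review `name` that are `term`."""
    return tokens[name].count(term) / len(tokens[name])

def idf(term):
    """Base-10 log of (number of reviews / reviews containing `term`)."""
    df = sum(1 for words in tokens.values() if term in words)
    return math.log10(n_docs / df)

def tf_idf(term, name):
    return tf(term, name) * idf(term)

for term in ["is", "affordable", "not", "cheap"]:
    print(term, [round(tf_idf(term, name), 3) for name in tokens])
```

Words that appear in every review, such as "is", get a score of exactly zero in all three reviews because their idf is log10(3/3) = 0.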

Why log in idf?

- If we look at the formula without the log function, we notice that tf is always small (at most 1), whereas the raw ratio in idf can become very large for a large corpus. Without the log, idf would dominate and the value of tf would hardly matter.

- The log of 1 is 0. Hence, when term t is contained in all documents, idf is zero: the term does not help to distinguish one document from another, so it contributes nothing to the score.

from sklearn.feature_extraction.text import TfidfVectorizer

Limitations

- tf-idf is based on the BoW model, so it does not capture word position, semantics, or co-occurrence across documents.

- It is only useful as a lexical-level feature.

Co-occurrence Matrix

A co-occurrence matrix is a representation of the corpus in matrix form. It tells us whether two words co-occur and, if so, how frequently. The key components of a co-occurrence matrix are the focus word and the window length.

Focus word & Window length

The word currently under study is the focus word, and the number of words we consider on each side of the focus word is the window length. The words that fall inside this window are the context words. In the table, the rows represent the focus words and the columns represent the context words. For this article, we use a window length of 2.

Objective

- Count how often each context word appears within the given window length of each focus word in the corpus

A co-occurrence matrix preserves part of the semantic relationship between words, because words used in similar contexts get similar rows. Once computed, it can be reused at any time.

| ↓F - C→ | this | product | is | good | and | affordable | not | cheap |
|---|---|---|---|---|---|---|---|---|
| this | 3 | 3 | 3 | 0 | 0 | 0 | 0 | 0 |
| product | 3 | 3 | 3 | 2 | 0 | 0 | 1 | 0 |
| is | 3 | 3 | 4 | 4 | 3 | 1 | 1 | 0 |
| good | 0 | 2 | 4 | 3 | 3 | 1 | 1 | 1 |
| and | 0 | 0 | 3 | 3 | 3 | 2 | 1 | 1 |
| affordable | 0 | 0 | 1 | 1 | 2 | 2 | 0 | 0 |
| not | 0 | 1 | 1 | 1 | 1 | 0 | 1 | 0 |
| cheap | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 |

F → Focus Word & C → Context Word

Let’s go through an example to make this clearer.

Focus Word: good

Window Length: 2

Context Words: left → product, is, not

right → and, is, affordable, cheap

Though the window length is 2, why do we see more than two words on either side of the focus word? This is because the words {product, is} lie within a distance of 2 to the left of the focus word good in reviews r1 and r3, but in review r2 the words to its left are {is, not}. So the set of context words over the whole corpus becomes {product, is, not} on the left side of the focus word. Similarly, the set becomes {and, is, affordable, cheap} on the right side.

import re
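The rest of the original listing is not reproduced here, so the following is only a minimal sketch of how such a matrix could be built. It assumes a simple regex tokenizer and a symmetric window of 2, and it counts the focus word itself, which is why the diagonal of the table above equals each word's frequency in the corpus. The name `result` is chosen to match the sentence that follows.

```python
import re

corpus = [
    "This product is good and is affordable.",   # r1
    "This product is not good and affordable.",  # r2
    "This product is good and cheap.",           # r3
]
vocabulary = ["this", "product", "is", "good", "and", "affordable", "not", "cheap"]
window = 2

# result[focus][context] = number of times `context` falls inside the window of `focus`.
result = {focus: {context: 0 for context in vocabulary} for focus in vocabulary}

for text in corpus:
    tokens = re.findall(r"[a-z]+", text.lower())
    for i, focus in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        # The range includes position i, so each occurrence also counts itself.
        for j in range(start, stop):
            result[focus][tokens[j]] += 1

for focus in vocabulary:
    print(focus, [result[focus][context] for context in vocabulary])
```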

The result variable stores the final co-occurrence matrix.

Limitation

- The matrix becomes very large (its dimension grows with the vocabulary size) for a large corpus

Solution

- Singular value decomposition (SVD) and Principal Component Analysis (PCA) are two eigenvalue methods used to reduce a high-dimensional dataset into fewer dimensions while retaining important information
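As an illustration of the SVD route, the sketch below factorizes the 8 × 8 co-occurrence matrix from this article with NumPy and keeps the first two singular directions. The choice of k = 2 and the scaling of the word vectors by the singular values are assumptions made for this example, not a recommendation.

```python
import numpy as np

words = ["this", "product", "is", "good", "and", "affordable", "not", "cheap"]

# Co-occurrence matrix from the table above (rows: focus words, columns: context words).
cooc = np.array([
    [3, 3, 3, 0, 0, 0, 0, 0],
    [3, 3, 3, 2, 0, 0, 1, 0],
    [3, 3, 4, 4, 3, 1, 1, 0],
    [0, 2, 4, 3, 3, 1, 1, 1],
    [0, 0, 3, 3, 3, 2, 1, 1],
    [0, 0, 1, 1, 2, 2, 0, 0],
    [0, 1, 1, 1, 1, 0, 1, 0],
    [0, 0, 0, 1, 1, 0, 0, 1],
], dtype=float)

# Full SVD: cooc = U @ diag(S) @ Vt, with singular values sorted in descending order.
U, S, Vt = np.linalg.svd(cooc)

k = 2  # number of dimensions to keep (assumed for illustration)
embeddings = U[:, :k] * S[:k]  # one k-dimensional vector per word

for word, vector in zip(words, embeddings):
    print(word, vector.round(3))
```

Each word is now described by 2 numbers instead of 8. On a real corpus, k is typically much larger than 2 but still far smaller than the vocabulary size.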